DroidForums.net | Android Forum & News


1% battery increments... am I the only one...

- Thread starter jtc303


CaptainSeth

New Member

Nope...me too. I find myself watching it too much and convincing myself it's draining too fast. That is, when I'm not too busy avoiding using my phone altogether to try and keep the percentage high...kind of silly, I know.

Who hates it?

Me! Precision and accuracy are two entirely different things. Precision is the difference between 10% increments and 1% increments. Accuracy is how close to 37% it really is when the meter shows 37%.

The problem with accuracy when it comes to these batteries is that the voltage drop from 20% to 10% (which is what the meter readings are tied to) doesn't represent the same amount of actual power consumption as the drop from 70% to 60%. As a result, the phone's meter will show faster consumption in one part of the linear scale, and slower in another.

So looking at greater precision (1% increments) doesn't result in a more accurate representation of the battery's actual level of charge and can actually be far more misleading. By looking at wider sections of the battery consumption, the variances in the battery's non-linear discharge curve are more evenly represented and the result is less stress......................for you!

Sent from my DROID RAZR using Tapatalk 2


Lol same here

Glad I'm not alone


Well put, FoxKat. Resolution creates precision, but not accuracy. A voltage readout may say 5.01367 VDC, but if the actual voltage is 4 VDC, the readout is useless, regardless of how precise it is.
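To put numbers on that, here's a small Python sketch. The three readings are hypothetical, modeled on the 5.01367 VDC example: precision shows up as the spread between repeated readings, accuracy as how far their average sits from the true 4 VDC.

```python
from statistics import mean, pstdev

# Hypothetical repeated readings of a source whose true value is 4.0 VDC
readings = [5.01367, 5.01366, 5.01368]
true_value = 4.0

spread = pstdev(readings)           # precision: how tightly the readings agree
bias = mean(readings) - true_value  # accuracy: how far the average is from the truth

print(f"spread = {spread:.6f} V (very precise)")
print(f"bias   = {bias:.5f} V (very inaccurate)")
```

A tiny spread with a one-volt bias is exactly the "precise but useless" readout described above.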


And likewise, resolution is another misunderstood term. As applied in this situation, the resolution of the stock battery meter is 10% steps, meaning 10 divisions (think pixels) across the entire range of usable battery power (think screen). So having more divisions lets you look at smaller and smaller portions.
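A quick Python sketch of the "divisions" idea: the same underlying estimate shown on a 10-step meter and a 100-step meter. The 37.6% estimate is hypothetical, and flooring to the step size is an assumption (a real meter might round instead).

```python
def displayed_level(soc_estimate: float, step: int) -> int:
    """Snap an SoC estimate (0-100) down to the meter's step size.

    Flooring is an assumption; a real meter might round instead.
    """
    return int(soc_estimate // step) * step


def divisions(step: int) -> int:
    """Number of distinct segments across 0-100%."""
    return 100 // step


estimate = 37.6  # hypothetical gauge estimate, in percent
for step in (10, 1):
    print(f"{divisions(step):3d} divisions -> shows {displayed_level(estimate, step)}%")
```

Both lines come from the same 37.6% estimate. The finer step only exposes more digits of the estimate; it doesn't bring the estimate any closer to the battery's true charge.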

It's sort of like a pizza pie. The pie can be cut into 8 sections like most pizza shops do, and when cut into 8s the slices are usually rather uniform in size. But Domino's is notorious for cutting their pies into 16 slices, and with those smaller slices comes greater potential for error. I often find one or more of those 16ths to be quite a bit larger or smaller than the rest.

Another analogy is a clock. Most clocks are divided into 12 numbered sections, hours from 1 to 12. Then there are often marks between each hour division, some only have half-hour divisions, some have quarter hour divisions, and some have minute divisions. Some clocks have ONLY 12, 3, 6 & 9, so you're only seeing "precision" or "resolution" of 4 over every 12 hours, and I've seen clocks that only have the 12 at the top. Then of course, there are clocks that have second hands and others that don't. The real question is how important in the grand scheme of things is it to know down to the second, what time it is? To the minute? Even to the quarter hour? Have you ever noticed how fast 15 minutes goes by?

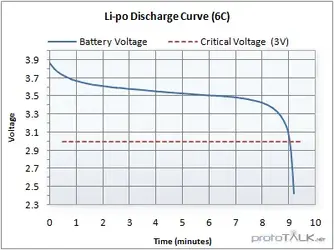

For our purposes, the battery's voltage doesn't fall linearly during discharge (it doesn't decrease evenly across the entire discharge range), which is why we call it a discharge "curve". If the "range" of voltages is from 4 V to 3 V (a very close approximation), and each tenth of a volt (0.1 V) is used to identify 10%, then it also stands to reason that one one-hundredth of a volt (0.01 V) is used to identify 1%. But during the bulk of the discharge curve, from about 80% through about 20%, the voltage changes very little, whereas during the top and bottom 20% of the curve it changes quite significantly.

See the chart above to better understand.

If we were reading 1% increments through the middle, the phone would appear to be discharging VERY SLOWLY, but if we were reading 1% increments through the top and bottom 20%, the phone would appear to be discharging rapidly. This causes confusion and gives a completely inaccurate representation of the actual battery level. It's a common complaint that phones appear to discharge very quickly from 100% to 90% or 80%, and that chart is the reason why.
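Here's a rough Python sketch of that effect. The breakpoints are hypothetical, not measured; they're only shaped the way the post describes (big voltage swings in the top and bottom 20%, a flat middle) and use the post's simplified 4 V to 3 V range. The naive meter maps every 0.1 V to 10%.

```python
# Hypothetical (voltage, true SoC %) breakpoints: illustrative only, not measured
CURVE = [(4.00, 100.0), (3.75, 80.0), (3.55, 20.0), (3.00, 0.0)]


def linear_meter(volts, v_full=4.0, v_empty=3.0):
    """Naive meter: map v_full..v_empty linearly onto 100%..0% (0.1 V = 10%)."""
    pct = (volts - v_empty) / (v_full - v_empty) * 100.0
    return max(0.0, min(100.0, pct))


def compare_segments(curve):
    """For each segment, compare charge actually used with what the naive meter shows."""
    rows = []
    for (v_hi, soc_hi), (v_lo, soc_lo) in zip(curve, curve[1:]):
        true_drop = soc_hi - soc_lo
        shown_drop = linear_meter(v_hi) - linear_meter(v_lo)
        rows.append((soc_hi, soc_lo, round(true_drop, 1), round(shown_drop, 1)))
    return rows


for hi, lo, true_drop, shown in compare_segments(CURVE):
    print(f"true {hi:.0f}% -> {lo:.0f}%: used {true_drop}%, naive meter shows {shown}%")
```

With these made-up numbers the top 20% of real charge shows up as a 25-point drop (looks fast), the middle 60% as only 20 points (looks slow), and the bottom 20% as 55 points.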

The system tries to compensate for this uneven or non-linear discharge cycle by taking note of the maximum charge level, the minimum charge level, and how long it takes to get from one to the other. This information is interpreted from the Meter Training I always talk so much about. It's not JUST that you set the Maximum charge and Minimum charge flags, but the discharge during the 2nd part of the training is KEY to the meter determining what 10% really looks like at any point in the discharge curve, so it can better use 10% to indicate a time/discharge factor, rather than just a voltage level change.
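The phone's actual training algorithm isn't documented here, so the following is only a hypothetical Python sketch of the general idea behind a full-cycle "training" run: integrate the current drawn from full to empty to learn the usable capacity, then express the level relative to that. The 300 mA draw, one-minute sampling, and stale 3300 mAh figure are all made-up illustration values.

```python
def learn_capacity(current_samples_ma, interval_s):
    """Integrate discharge current (mA), sampled every interval_s seconds over one
    full-to-empty training run, returning the usable capacity in mAh."""
    return sum(current_samples_ma) * interval_s / 3600.0


def level_from_capacity(drawn_since_full_mah, capacity_mah):
    """Remaining charge as a percentage of a (learned or stale) capacity."""
    remaining = max(0.0, capacity_mah - drawn_since_full_mah)
    return 100.0 * remaining / capacity_mah


# Hypothetical training run: a steady 300 mA draw, sampled once a minute for 10 hours
learned = learn_capacity([300] * 600, 60)  # 3000.0 mAh actually usable
stale = 3300.0                             # hypothetical out-of-date capacity figure

for drawn in (750.0, 3000.0):
    print(f"drawn {drawn:.0f} mAh: learned -> {level_from_capacity(drawn, learned):.1f}%, "
          f"stale -> {level_from_capacity(drawn, stale):.1f}%")
```

With the stale figure, the meter would still claim about 9% at the moment the battery is actually empty, which is the kind of drift a fresh training run is meant to clear up.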

Unfortunately, other factors also affect how the meter interprets the voltage-over-time data, such as how fast power is being drawn at one time compared to another; far more power is used when the phone is on and in use with the screen up than when it sits untouched on a table or desk for extended periods. The chart above shows a very smooth discharge curve, partly because the rate of discharge for that chart is VERY FAST (9 minutes from full to empty), which indicates a very high current draw (6C, meaning a current of 6 times the battery's amp-hour rating...in the MAXX, that would be a draw rate of 19.8 amps!!).
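The C-rate arithmetic behind those figures, as a short Python sketch. The 3300 mAh capacity is the MAXX rating implied by the post's own numbers (19.8 A / 6); the runtime is the ideal figure, ignoring real-world losses.

```python
def c_rate_current_amps(c_rate, capacity_mah):
    """Current implied by a C-rate: 1C drains the rated capacity in one hour."""
    return c_rate * capacity_mah / 1000.0


def ideal_runtime_minutes(c_rate):
    """Full-to-empty time at a constant C-rate, ignoring real-world losses."""
    return 60.0 / c_rate


MAXX_CAPACITY_MAH = 3300  # Droid RAZR MAXX rated capacity

print(c_rate_current_amps(6, MAXX_CAPACITY_MAH))  # 19.8
print(ideal_runtime_minutes(6))                   # 10.0
```

An ideal 6C discharge empties the cell in 10 minutes, close to the roughly 9 minutes on the chart.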

Also the discharge rate on the chart above is the same current draw across the entire discharge curve. If you've seen some of the charts for my phone's consumption or have done any of your own, you'll notice the voltages are up and down, sometimes dramatically across the discharge curve, making it difficult - dare I say near impossible for the meter to be "accurately" representing the current battery levels of remaining charge at nearly any point. I'll add a chart below to show this from my phone. You'll see not a nice smooth line like the curve above, but something that looks like cactus needles sticking up and down. THIS is the MAIN reason why the meters get confused and start to show incorrect relative charge levels, and WHY it's so important to train the meter on a relatively infrequent basis.

zookeeper, you are partially right. First off, the RAZR is using a Lithium Ion Polymer Pouch Pack, which is NOT a smart battery. There is NO circuitry inside the battery to assist in the measurement of the battery's State of Charge, and only protection circuitry may exist to prevent deep discharge, and possibly also to prevent over-charge, as well as possibly thermal protection (though all of those things can be on-board in the phone instead, so there's no proof for or against that claim).

One thing is for sure, the Pouch Pack in our phones has only 2 (TWO) terminals, (+) & (-), and there are NO data terminals, so for any "information" that the battery could collect if it were a "smart battery", it would have no way to convey that information to the phone's charging system for processing. For our specific phones, the PHONE does ALL the charging and monitoring of the State of Charge for the battery.

Now to corroborate your claim, YES, coulomb counting is used, but it is used in combination with the voltage levels as well, so if the voltage levels are varying wildly, the result will vary as well. I used voltage as the talking point to keep it rather simple, but since you bring it up, here's the deep discussion.

From BatteryUniversity.com:

"Does the Battery Fuel Gauge Lie?


Why the Battery State-of-charge cannot be measured accurately

Measuring stored energy in an electrochemical device, such as a battery, is complex and state-of-charge (SoC) readings on a fuel gauge provide only a rough estimate. Users often compare battery SoC with the fuel gauge of a vehicle. Calculating fluid in a tank is simple because a liquid is a tangible entity; battery state-of-charge is not. Nor can the energy stored in a battery be quantified because prevailing conditions such as load current and operating temperature influence its release. A battery works best when warm; performance suffers when it is cold. In addition, a battery loses capacity through aging.

Current fuel gauge technologies are fraught with limitations and this came to light when users of the new iPad assumed that a 100 percent charge on the fuel gauge should also relate to a fully charged battery. This is not always so and users complained that the battery was only at 90 percent.

The modern fuel gauge used in iPads, smartphones and laptops read SoC through coulomb counting and voltage comparison. The complexity lies in managing these variables when the battery is in use. Applying a charge or discharge acts like a rubber band, pulling the voltage up or down, making a calculated SoC reading meaningless. In open circuit condition, as is the case when measuring a naked battery, a voltage reference may be used; however temperature and battery age will affect the reading. The open terminal voltage as a SoC reference is only reliable when including these environmental conditions and allowing the battery to rest for a few hours before the measurement.

In the case of the iPad, a 10 percent discrepancy between fuel gauge and true battery SoC is acceptable for consumer products. The accuracy will likely drop further with use, and depending on the effectiveness of a self-learning algorithm, battery aging can add another 20-30 percent to the error. By this time the user has gotten used to the quirks of the device and the oddity is mostly forgotten or accepted. While differences in the runtime cause only a mild inconvenience to a casual user, industrial applications, such as the electric powertrain in an electric vehicle, will need a better system. Improvements are in the work, and these developments may one day also benefit consumer products.


Coulomb counting is the heart of today’s fuel gauge. The theory goes back 250 years when Charles-Augustin de Coulomb first established the “Coulomb Rule.” It works on the principle of measuring in-and-out flowing currents. Coulomb counting also produces errors; the outflowing energy is always less than what goes in. Inefficiencies in charge acceptance, especially towards the end of charge, tracking errors, as well as losses during discharge and self-discharge while in storage contribute to this. Self-learning and periodic calibrations through a full charge/discharge assure an accuracy most can live with. "
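A minimal Python sketch of the coulomb-counting part only. Real gauges blend this with voltage readings, as discussed above, and the 0.98 charge efficiency is a hypothetical value standing in for the quote's point that less comes out than goes in.

```python
class CoulombCounter:
    """Minimal coulomb counter: integrates current in and out of the battery.

    charge_efficiency < 1 models charge that goes in but isn't stored;
    the 0.98 default is a hypothetical value.
    """

    def __init__(self, capacity_mah, soc_percent=100.0, charge_efficiency=0.98):
        self.capacity_mah = capacity_mah
        self.charge_mah = capacity_mah * soc_percent / 100.0
        self.charge_efficiency = charge_efficiency

    def update(self, current_ma, seconds):
        """Positive current = charging, negative = discharging."""
        delta_mah = current_ma * seconds / 3600.0
        if delta_mah > 0:
            delta_mah *= self.charge_efficiency  # only part of the input is stored
        self.charge_mah = min(self.capacity_mah, max(0.0, self.charge_mah + delta_mah))

    @property
    def soc_percent(self):
        return 100.0 * self.charge_mah / self.capacity_mah


gauge = CoulombCounter(capacity_mah=3300)
gauge.update(-330, 3600)            # one hour at 330 mA discharge
print(f"{gauge.soc_percent:.1f}%")  # 90.0%
gauge.update(330, 3600)             # one hour of charging at the same current
print(f"{gauge.soc_percent:.1f}%")  # 99.8%, not back to 100%
```

Small errors like this accumulate cycle after cycle, which is why the quote ends with periodic full charge/discharge calibrations.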

For reference, here's the voltage chart for the last 6 hours on my phone. Note: this is a discharge curve (if you can call it that), and NO charging was performed during these 6 hours - in fact, not in the last 32 hours. The voltage varies because it drops while the phone is being used and recovers while it's at rest.

In the example below, you can see the voltage started out about 6 hours ago at 3.74V and, while at rest, recovered to 3.78V. Then the phone was used for about 7.3 minutes over 4 calls, during which the voltage dropped to nearly 3.7V. Afterwards, during rest, it recovered to just over 3.78V; then I had three calls totaling 6.15 minutes, during which it fell again to nearly 3.76V. Then it sat and rested for over an hour and recovered to nearly 3.81V, and during the next 1.5 hours of moderately light use it fell to 3.71V, finally recovering during rest in the last 0.25 hours to 3.74V.

THIS is the PRIMARY reason why our meters get out of sync with the actual battery SoC.

I rest my case.
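To see how much a voltage-only meter would bounce around on that log, here's a Python sketch that feeds the approximate readings from the post (the "nearly" and "just over" values rounded to two decimals) through the simplified 4 V to 3 V linear mapping from earlier in the thread. It's an illustration of that simplified model, not of how the RAZR's gauge actually works.

```python
# Approximate voltages from the 6-hour log described in the post (no charging at all)
LOG = [
    ("start", 3.74), ("rest", 3.78), ("4 calls", 3.70), ("rest", 3.78),
    ("3 calls", 3.76), ("rest", 3.81), ("light use", 3.71), ("rest", 3.74),
]


def linear_meter(volts, v_full=4.0, v_empty=3.0):
    """Naive meter: map 4 V..3 V linearly onto 100%..0% (0.1 V = 10%)."""
    pct = (volts - v_empty) / (v_full - v_empty) * 100.0
    return max(0.0, min(100.0, pct))


shown = [round(linear_meter(v)) for _, v in LOG]
rises = sum(1 for a, b in zip(shown, shown[1:]) if b > a)

for (label, v), pct in zip(LOG, shown):
    print(f"{label:>9}: {v:.2f} V -> {pct}%")
print(f"meter went UP {rises} times without any charging")
```

The naive reading swings between 70% and 81% and climbs four times with the charger never connected.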

Thanks again FoxKat, I really enjoy these discussions as it was my "bread and butter" for many years. When I was involved in the interservice calibration (PMEL) school in Colorado during the 80's, we spent what most would think an exorbitant amount of time teaching the definitions of precision, accuracy, and resolution and thoroughly tested the students' understanding. You use great analogies that are easy to understand. Of course, we used to use groupings of bullets on a target!!:biggrin:

WOW! Thanks for the compliment. Bullets on a target are a great analogy as well. Resolution is probably the most widely misunderstood of all, since it involves not only pixel density, but also pixel pitch, viewing distance, grid design (e.g. RGB Stripe/RGBG Pentile/RGBW Pentile, Diagonal OLPC, etc.), grid layout (e.g. parallel matrix, triad grouping), screen mask, screen dimension, and so much more.

I have to laugh every time I hear about the "Retina Display" on the iPhone, because it's been proven insufficient to qualify as "Retina" resolution for those with perfect close-up vision. But what's even funnier is that at the typical distance I view my Droid RAZR, mostly near arm's length (aging eyes), I don't see any of the pixel definition that I read about people complaining of - "looking through a screen". If I put on a pair of 2X or 2.5X reading glasses and hold the phone less than a foot away, then I can see them, but that's not practical, so for me there's no appreciable difference in apparent resolution between my Droid RAZR MAXX and my boss's iPhone 4S.


Sorry, when I threw the term resolution out there, I was referring to readouts (i.e., voltage). Another concept we had to make sure our people understood was exactly this. Take a voltmeter with a 5 1/2 digit display. When adjusting a circuit for 1 VDC, you could get the display to read 1.00000 VDC, leading you to believe you had made a very accurate adjustment. Actually, you made a very precise adjustment, because your reading was resolved to 10 µVDC (least significant digit = 0.00001 VDC). However, if the cumulative error of your measurement (accuracy) was +/- 1 mVDC, then all you could certify was that you had adjusted the circuit to 1 VDC +/- 1 mVDC. So the actual voltage you adjusted to such a precise reading lies somewhere between 0.99900 and 1.00100 VDC. Seems simple, but many people had trouble grasping this concept. I am going back over 18 yrs, but I worked in calibration for over 20, and still have a bit of synaptic process left in this area, although as short-lived at times as Edison's early attempts at light!:biggrin:

Sorry, I should have specified that you took 3 readings all measuring 1.00000 VDC after the adjustment. This makes the reading precise (repeatability). Maybe those cells ain't firing so good!:blink:
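The voltmeter example worked through in Python, using `Decimal` so the digits match the display exactly. The readings, resolution, and +/- 1 mV accuracy are the figures from the example; the function name is just for illustration.

```python
from decimal import Decimal


def certified_interval(reading, accuracy):
    """All you can certify: the true value lies within reading +/- accuracy."""
    return reading - accuracy, reading + accuracy


readings = [Decimal("1.00000")] * 3  # three identical readings after adjustment
resolution = Decimal("0.00001")      # least significant digit of the display
accuracy = Decimal("0.001")          # cumulative measurement error, +/- 1 mV

repeatability = max(readings) - min(readings)  # 0: perfectly precise
low, high = certified_interval(readings[0], accuracy)

print(f"resolution: {int(resolution * 10**6)} uV, spread: {repeatability}")
print(f"certified: {low} V to {high} V")
```

Zero spread and 10 µV resolution, yet the certified band is still 2 mV wide: precision and resolution don't buy accuracy.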


And HEY, I knew what you were saying...but most don't think of resolution as the ranges or precision on a digital or analog voltmeter. Also, it's quite obvious from the post I made a couple hours ago, where I quoted:

"In the case of the iPad, a 10 percent discrepancy between fuel gauge and true battery SoC is acceptable for consumer products. The accuracy will likely drop further with use, and depending on the effectiveness of a self-learning algorithm, battery aging can add another 20-30 percent to the error."

This means a 1% precision is overridden by an error of anywhere from 10% to 40%, so a reading of, let's say, 53% battery level can be off by as much as 40 points; in all actuality it could be anywhere from 13% to 93%. Knowing that, what is the benefit of having 1% precision? None for practical purposes.
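A small Python sketch of that error band, using the figures from the quote: about 10 points of gauge error on a new battery, plus another 20-30 points from aging in the worst case. The result is clamped to the 0-100% scale.

```python
def possible_range(displayed, error_points):
    """Range the true charge could be in, clamped to 0-100%."""
    return max(0, displayed - error_points), min(100, displayed + error_points)


displayed = 53
print(possible_range(displayed, 10))       # new battery: (43, 63)
print(possible_range(displayed, 10 + 30))  # worst case with aging: (13, 93)
```

Either way, the band is tens of points wide, so the last digit of a 1% readout carries no real information.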
